Andreea Munteanu

on 2 October 2024

Canonical is committed to enabling organizations to secure and scale their AI/ML projects in production. This is why we are pleased to announce that we have joined the Open Platform for Enterprise AI (OPEA), a project community at the Linux Foundation focused on enterprise AI using open-source tooling.

What is OPEA?

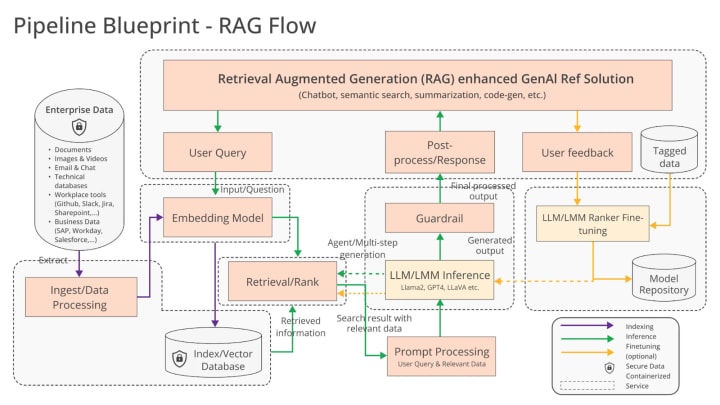

OPEA is a project community which provides an ecosystem orchestration framework for GenAI technologies and workflows.

OPEA helps organizations develop and deploy GenAI solutions using performant open source tooling. It provides guidance, from architectural blueprints to educational material, to help enterprises build scalable and secure infrastructure for their AI projects.

In addition, OPEA works to upskill the AI practitioners behind the models. It runs events across a range of platforms that give practitioners the guidance they need to move their projects beyond the proof of concept (PoC) stage.

Canonical is excited to join OPEA's cross-industry members, such as AMD, Domino Data Lab and Intel. Together, we'll work to lower the barriers to entry that developers face when they first experiment with GenAI. We're looking forward to bringing OPEA's architectural blueprint to life and providing tooling for the whole ML lifecycle, including orchestration, workload automation and bare metal provisioning services. As part of OPEA, we're eager to show what open source technology can do for AI.

“We welcome Canonical joining OPEA,” says Arun Gupta, VP & GM Open Ecosystem Initiatives at Intel Corporation. “Ubuntu plays a key role in the AI market, especially for the developer ecosystem. I am convinced that the project will benefit from their holistic view that encompasses tools for data preparation, development, enterprise grade deployments, support options and more, and that are based on open source frameworks and supported by the open source ecosystem.”

Source: GenAI blueprint from OPEA

Enterprise AI: challenges and opportunities

Google reported that 64% of organizations are looking to adopt GenAI (source). As the industry evolves and enterprises move their initiatives beyond experimentation, they need to think seriously about the challenges they will face. These include:

- Security: Whether we are talking about the security of the model, the data or the tooling, organizations should treat it as a top priority. This includes security maintenance of the packages underneath the model, harnessing confidential computing to protect models in heavily regulated organizations, and using livepatching to avoid reboots that would interrupt training.

- Scalability: While experimentation is low-hanging fruit, organizations often struggle to move beyond it. To scale up their AI strategy, they need to consider computing power and the related optimization opportunities, their tooling needs and integrations, and their ability to offer this infrastructure to larger teams.

- Portability: Organizations often start in one environment or with one silicon vendor, but as projects mature, they diversify their portfolio and often end up running in hybrid or multi-cloud environments. Keeping the same AI software stack in every scenario lets enterprises accelerate project delivery and avoid spending time on unnecessary upskilling for different platforms or on migrating from one solution to another.

Chris Schnabel, Silicon Alliances Ecosystem Manager at Canonical, commented on how OPEA is tackling these challenges: "Enterprises face a large number of challenges to moving their AI projects past the experimental phase and into production environments. This includes addressing data privacy and security, effectively scaling their infrastructure, and ensuring that regulatory standards can be met. As a community, OPEA helps define best practices for deploying AI, and Canonical can provide the practical tools which ensure that enterprises can easily adopt, secure and maintain these AI deployments over the long term."

Enterprise AI as part of Canonical’s commitment

At Canonical, we believe that organizations will succeed with their AI initiatives by building AI infrastructure that lets them run the entire ML lifecycle in a secure, scalable and portable manner. This is why we provide an end-to-end solution that runs in different environments and at different scales. To make this a reality for developers, we recently released the Data Science Stack (DSS), an out-of-the-box solution for data scientists and machine learning engineers. It runs seamlessly from AI workstations to data centers, providing a single development environment and lowering the barrier to entry for AI practitioners. OPEA members can use DSS to experiment faster and get started with GenAI use cases more easily: they can quickly spin up their ML environment and validate their ideas, accelerating project delivery.

In addition to our commitment to providing open source software, organizations can also make use of Canonical's enterprise support and managed services. You can learn more about building, deploying and managing your AI projects with open source at https://ubuntu.com/ai and https://canonical.com/solutions/ai.